Bike Motion Analysis

UFM demonstrates exceptional performance in tracking complex motion patterns of bicycles in urban environments.

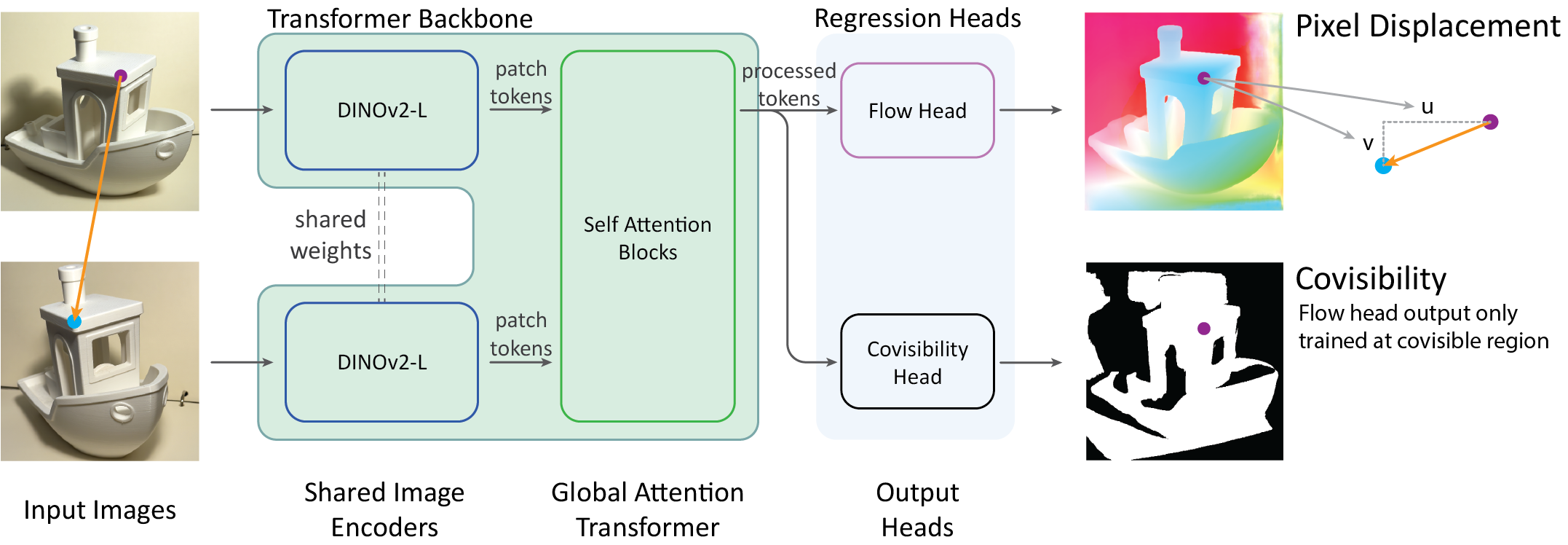

TLDR: UFM is a simple, end-to-end trained transformer model that directly regresses pixel displacement images (flow) and covisibility. It can be applied to both optical flow and wide-baseline matching tasks with high accuracy and efficiency.

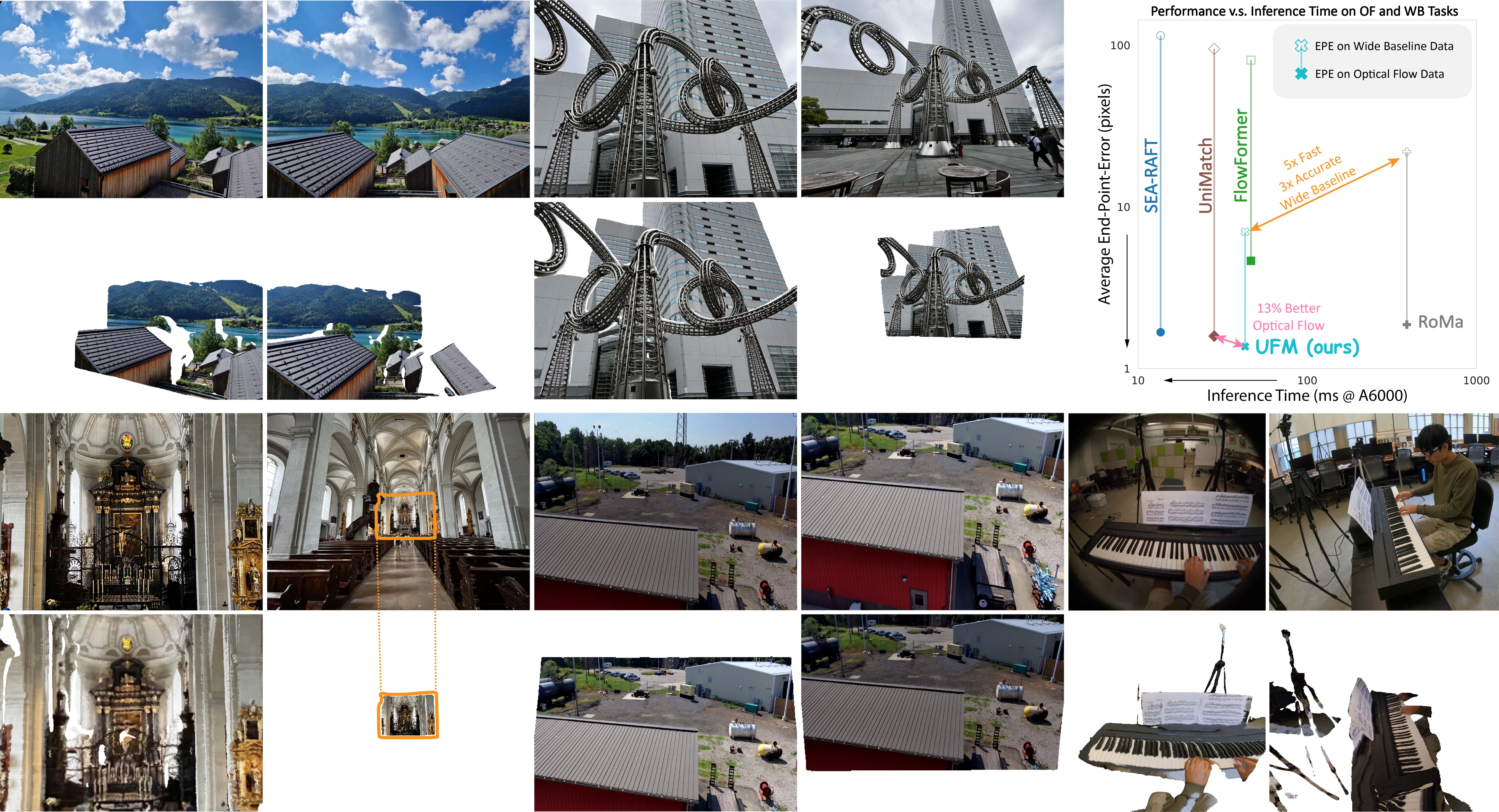

Interpolations of UFM's dense 2D correspondence show a strong sense of 3D motion!

UFM achieves high accuracy and efficiency for both in-the-wild optical flow and wide-baseline matching tasks.

Dense image correspondence is central to many applications, such as visual odometry, 3D reconstruction, object association, and re-identification. Historically, dense correspondence has been tackled separately for wide-baseline scenarios and optical flow estimation, despite the common goal of matching content between two images. In this paper, we develop a Unified Flow & Matching model (UFM), which is trained on unified data for pixels that are co-visible in both source and target images. UFM uses a simple, generic transformer architecture that directly regresses the (u,v) flow. It is easier to train and more accurate for large flows than the typical coarse-to-fine cost volumes used in prior work. UFM is 28% more accurate than state-of-the-art flow methods (Unimatch), while also having 62% less error and running 6.7x faster than dense wide-baseline matchers (RoMa). UFM is the first to demonstrate that unified training can outperform specialized approaches across both domains. This enables fast, general-purpose correspondence and opens new directions for multi-modal, long-range, and real-time correspondence tasks.
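
To make "error on co-visible pixels" concrete, here is a minimal sketch of an error measure restricted to co-visible pixels. The exact training loss and evaluation metric are not given in this summary. Mean end-point error (EPE), a common optical flow metric, is used here purely as an assumption, and the function name `covisible_epe` is hypothetical.

```python
import numpy as np

def covisible_epe(pred_flow, gt_flow, covisible):
    """Mean end-point error over co-visible pixels only (illustrative assumption).

    pred_flow, gt_flow: (H, W, 2) arrays of (u, v) displacements in pixels.
    covisible:          (H, W) boolean mask, True where the source pixel is
                        also visible in the target image.
    """
    # Per-pixel Euclidean distance between predicted and ground-truth flow.
    err = np.linalg.norm(pred_flow - gt_flow, axis=-1)
    if not covisible.any():
        return 0.0
    # Pixels outside the mask have no valid correspondence, so they are skipped.
    return float(err[covisible].mean())

gt = np.zeros((2, 2, 2))
pred = np.zeros((2, 2, 2))
pred[0, 0] = (3.0, 4.0)      # 5-pixel error on a co-visible pixel
pred[1, 1] = (100.0, 100.0)  # large error, but on a pixel that is masked out
mask = np.array([[True, True], [True, False]])
print(covisible_epe(pred, gt, mask))
```

Masking in this way means that pixels occluded or out of view in the target image do not contribute to the error.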

UFM employs a simple end-to-end transformer architecture. It first encodes both images with DINOv2, and then processes the concatenated features with self-attention layers. The model then regresses the (u,v) flow image and covisibility prediction through DPT heads. We trained the model on a combined dataset from 12 optical flow and wide-baseline matching datasets, showing mutual improvement on both tasks.
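
The data flow described above can be traced with a shape-level sketch. This is not the released implementation: a random linear patch embedding stands in for DINOv2, a single-head attention layer stands in for the transformer blocks, and a per-patch linear projection stands in for the DPT heads. All names (`ufm_like_forward`, `PATCH`, `DIM`, and so on) are hypothetical, and decoding from the source-image tokens is an assumption.

```python
import numpy as np

rng = np.random.default_rng(0)
PATCH, DIM = 4, 16  # hypothetical patch size and token width

def patchify(img):
    """(H, W, C) image -> (N, PATCH*PATCH*C) non-overlapping patches and the patch grid."""
    h, w, c = img.shape
    gh, gw = h // PATCH, w // PATCH
    x = img[:gh * PATCH, :gw * PATCH].reshape(gh, PATCH, gw, PATCH, c)
    return x.transpose(0, 2, 1, 3, 4).reshape(gh * gw, PATCH * PATCH * c), (gh, gw)

def unpatchify(per_patch, grid, channels):
    """(N, PATCH*PATCH*channels) -> dense (H, W, channels) image."""
    gh, gw = grid
    x = per_patch.reshape(gh, gw, PATCH, PATCH, channels)
    return x.transpose(0, 2, 1, 3, 4).reshape(gh * PATCH, gw * PATCH, channels)

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def self_attention(tokens, wq, wk, wv):
    """Single-head self-attention with a residual connection."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))
    return tokens + attn @ v

def init_params(num_layers=2, scale=0.1):
    """Random weights; a real model would load trained parameters instead."""
    return {
        "embed": rng.normal(0, scale, (PATCH * PATCH * 3, DIM)),
        "layers": [tuple(rng.normal(0, scale, (DIM, DIM)) for _ in range(3))
                   for _ in range(num_layers)],
        "flow_head": rng.normal(0, scale, (DIM, PATCH * PATCH * 2)),
        "covis_head": rng.normal(0, scale, (DIM, PATCH * PATCH)),
    }

def ufm_like_forward(source, target, params):
    src_patches, grid = patchify(source)
    tgt_patches, _ = patchify(target)
    # The same encoder is applied to both images (stand-in for DINOv2).
    src_tok = src_patches @ params["embed"]
    tgt_tok = tgt_patches @ params["embed"]
    # Concatenate tokens so attention can relate source and target patches.
    tokens = np.concatenate([src_tok, tgt_tok], axis=0)
    for wq, wk, wv in params["layers"]:
        tokens = self_attention(tokens, wq, wk, wv)
    # Assumption: dense outputs are decoded from the source-image tokens.
    src_out = tokens[: src_tok.shape[0]]
    flow = unpatchify(src_out @ params["flow_head"], grid, 2)
    covis_logit = unpatchify(src_out @ params["covis_head"], grid, 1)[..., 0]
    covis = 1.0 / (1.0 + np.exp(-covis_logit))  # probability in (0, 1)
    return flow, covis

source = rng.random((16, 24, 3))
target = rng.random((16, 24, 3))
flow, covis = ufm_like_forward(source, target, init_params())
print(flow.shape, covis.shape)  # (16, 24, 2) (16, 24)
```

The point of the sketch is the interface: two images go in, and one (u,v) displacement plus one covisibility score per source pixel come out, with no cost volume in between.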

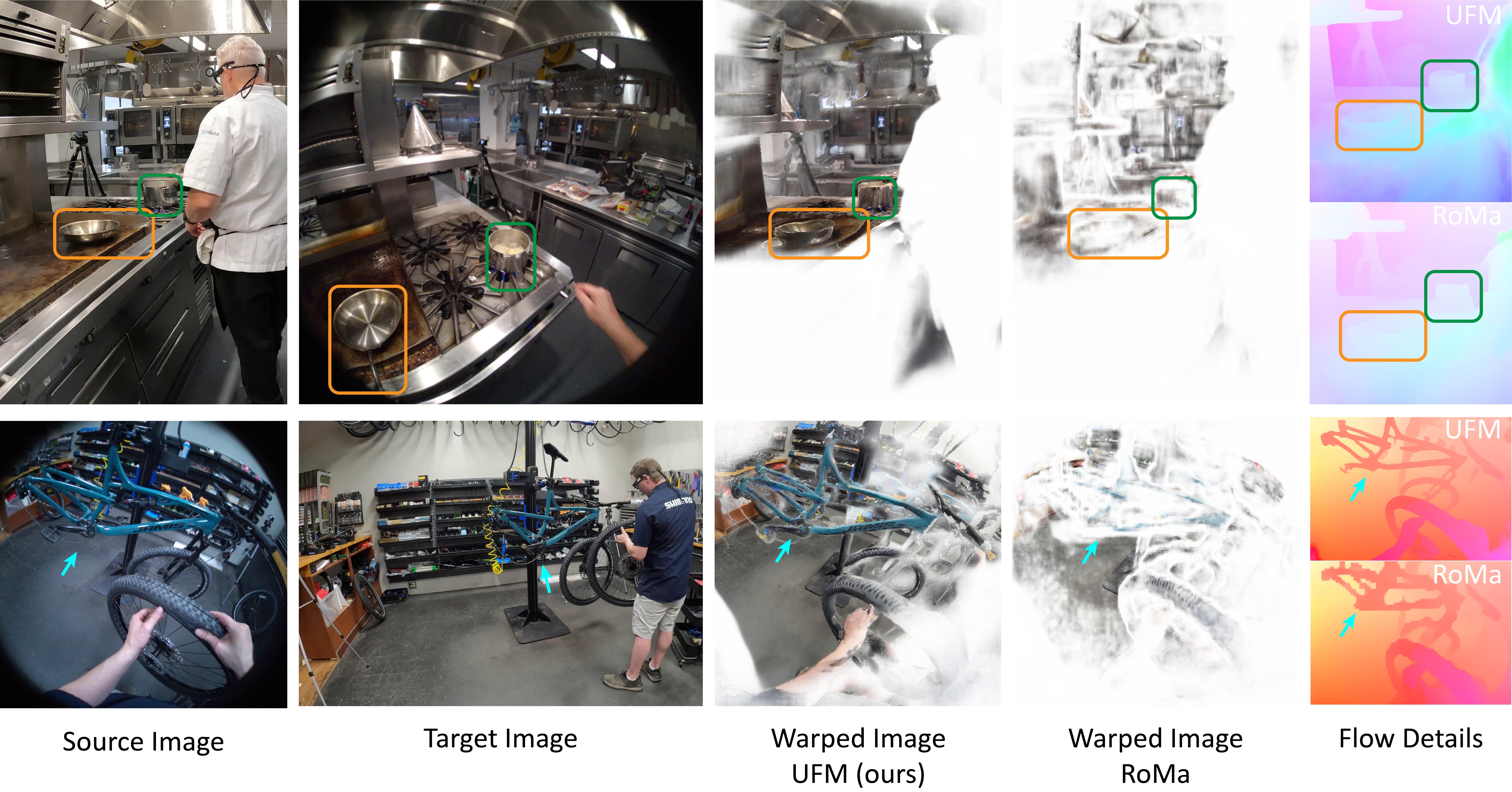

We visualize UFM's predictions by warping the target image back to the source image using the predicted flow image.
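
A minimal sketch of such a backward warp is shown below. It assumes the convention that `flow[y, x] = (u, v)` maps source pixel `(x, y)` to target location `(x + u, y + v)` and uses bilinear sampling. The function name `warp_target_to_source` is hypothetical and this is not taken from the UFM codebase.

```python
import numpy as np

def warp_target_to_source(target, flow):
    """Backward-warp `target` into the source frame with bilinear sampling.

    target: (Ht, Wt, C) float array, the target image.
    flow:   (H, W, 2) float array of (u, v) displacements per source pixel.
    Returns the warped image (H, W, C) and a boolean (H, W) mask that is True
    where the correspondence lands inside the target image.
    """
    h, w = flow.shape[:2]
    th, tw = target.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    tx = xs + flow[..., 0]
    ty = ys + flow[..., 1]
    in_bounds = (tx >= 0) & (tx <= tw - 1) & (ty >= 0) & (ty <= th - 1)

    # Clamp so indexing is always valid; out-of-bounds pixels are zeroed below.
    tx = np.clip(tx, 0, tw - 1)
    ty = np.clip(ty, 0, th - 1)
    x0 = np.floor(tx).astype(int)
    y0 = np.floor(ty).astype(int)
    x1 = np.minimum(x0 + 1, tw - 1)
    y1 = np.minimum(y0 + 1, th - 1)
    wx = (tx - x0)[..., None]
    wy = (ty - y0)[..., None]

    # Blend the four neighbouring target pixels.
    top = (1 - wx) * target[y0, x0] + wx * target[y0, x1]
    bottom = (1 - wx) * target[y1, x0] + wx * target[y1, x1]
    warped = (1 - wy) * top + wy * bottom
    warped[~in_bounds] = 0.0
    return warped, in_bounds

# Toy check: a uniform flow of one pixel to the right shifts the image left.
target = np.fromfunction(lambda y, x, c: x + 10.0 * y, (4, 5, 1))
flow = np.zeros((4, 5, 2))
flow[..., 0] = 1.0
warped, valid = warp_target_to_source(target, flow)
print(np.allclose(warped[:, :4, 0], target[:, 1:, 0]))  # True
```

If the flow is accurate, the warped image should resemble the source image wherever the pixels are co-visible, so multiplying the result by a predicted covisibility mask is one natural way to hide regions with no valid match.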

UFM holds a significant advantage and generalizes to unseen environments, geometric perspectives, and camera models.

@inproceedings{zhang2025ufm,
  title={UFM: A Simple Path towards Unified Dense Correspondence with Flow},
  author={Zhang, Yuchen and Keetha, Nikhil and Lyu, Chenwei and Jhamb, Bhuvan and Chen, Yutian and Qiu, Yuheng and Karhade, Jay and Jha, Shreyas and Hu, Yaoyu and Ramanan, Deva and Scherer, Sebastian and Wang, Wenshan},
  booktitle={arXiv},
  year={2025}
}